Global demand for data continues to grow at a compound annual growth rate (CAGR) of 25%, according to the latest Cisco report. Alongside consumer demand, traffic inside the data center is increasing at a similar pace. In fact, the data center itself generates more traffic than consumers do. Network function virtualization (NFV) and the hyperscaling of data centers mean that traffic is continually and dynamically allocated across the data center so that resources are used to the fullest. All of this growing internet traffic means that technology inside the data center must become faster and more efficient.

Figure 1: NFV and software defined networks (SDN) lead to significant changes inside the data center from a technology standpoint.

100G gives way to 400G and eventually 800G

How fast data moves across the infrastructure is the key to keeping up with consumer demand. Less than 10 years ago, the backbone of the infrastructure was based on 40 Gbit/s technologies. 40 Gbit/s gave way to 100 Gbit/s, which started as 10 lanes of 10 Gbit/s and then moved to 4 lanes of 25 Gbit/s. However, 100 Gbit/s is not fast enough for the changes that are coming. Technologies such as virtual reality, autonomous driving, and big data need more data at lower latencies. As a result, research began on how to get to 400 Gbit/s.

The big debate for 400 Gbit/s was whether traditional signaling (NRZ) would be retained or whether a new technology (PAM-4) would displace it. Standards bodies such as the IEEE and the OIF (which publishes CEI) spent significant time discussing both approaches and ultimately chose PAM-4 signaling as the de facto technology for 400 GbE. This means that the change to 400 GbE was revolutionary, not evolutionary. As a result, much of the ecosystem remains to be built out. 100 GbE is low cost and reliable, whereas 400 GbE has yet to reach the cost and reliability needed for the entire infrastructure to move to it. Evidence of a stall in the 400 GbE ecosystem appeared when Facebook announced that it would continue to build data centers based on 100 GbE technologies.
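To make the difference between the two signaling schemes concrete, here is a minimal Python sketch. It assumes normalized amplitude levels (-1/+1 for NRZ, -3/-1/+1/+3 for PAM-4) and a Gray-coded bit-pair mapping, which is a common convention; the function names and the table are illustrative, not taken from any standard's text.

```python
# Assumed Gray-coded mapping of bit pairs to four normalized amplitude levels.
# Adjacent levels differ by exactly one bit, so a one-level slip costs one bit error.
PAM4_LEVELS = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}


def nrz_encode(bits):
    """NRZ: one symbol per bit (0 -> -1, 1 -> +1)."""
    return [1 if b else -1 for b in bits]


def pam4_encode(bits):
    """PAM-4: one symbol per pair of bits, using the table above."""
    if len(bits) % 2:
        raise ValueError("PAM-4 needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


bits = [0, 0, 0, 1, 1, 1, 1, 0]
nrz_symbols = nrz_encode(bits)
pam4_symbols = pam4_encode(bits)
print(len(nrz_symbols), "NRZ symbols:", nrz_symbols)
print(len(pam4_symbols), "PAM-4 symbols:", pam4_symbols)
```

The same eight bits need eight NRZ symbols but only four PAM-4 symbols. That is why PAM-4 doubles the data rate at a given symbol rate, at the cost of packing four levels into the same voltage swing.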

Even with a slight slowdown in the 400 Gbit/s build-out of data centers, technology has not stopped progressing. As 5G takes over the cell phone networks, even more stress will be put on data centers, and technology is already being developed for 800 Gbit/s. Current plans call for 800 GbE to roll out in two phases: first as 8 lanes of 100 Gbit/s, and eventually as 4 lanes of 200 Gbit/s PAM-4 signaling. While 800 GbE might appear evolutionary, moving to 200 Gbit/s PAM-4 signaling will bring plenty of challenges for developers. Today at 100 Gbit/s we see huge signal-to-noise challenges and margins that are continually stressed. Forward error correction and equalization are now required, as the achievable uncorrected bit error rate has degraded from 10^-12 to 10^-5. Now imagine how difficult doubling that data rate will be.
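The lane arithmetic behind these generations can be sketched in a few lines of Python. The figures are nominal payload rates that ignore FEC and line-coding overhead, and the modulation assigned to the first 800 GbE phase (PAM-4 at 100 Gbit/s per lane) is an assumption of this sketch; the configuration labels and function names are illustrative.

```python
# Bits carried per symbol for each modulation scheme.
BITS_PER_SYMBOL = {"NRZ": 1, "PAM-4": 2}


def aggregate_gbps(lanes, lane_gbps):
    """Total nominal link rate in Gbit/s."""
    return lanes * lane_gbps


def symbol_rate_gbaud(lane_gbps, modulation):
    """Nominal per-lane symbol rate in GBd (overhead ignored)."""
    return lane_gbps / BITS_PER_SYMBOL[modulation]


# (label, lanes, Gbit/s per lane, modulation)
configs = [
    ("100G, first generation", 10, 10, "NRZ"),
    ("100G, second generation", 4, 25, "NRZ"),
    ("800G, phase 1 (PAM-4 assumed)", 8, 100, "PAM-4"),
    ("800G, phase 2", 4, 200, "PAM-4"),
]

for label, lanes, lane_gbps, modulation in configs:
    total = aggregate_gbps(lanes, lane_gbps)
    baud = symbol_rate_gbaud(lane_gbps, modulation)
    print(f"{label}: {lanes} x {lane_gbps} = {total} Gbit/s, {baud:g} GBd per lane")
```

Under these assumptions, halving the lane count from phase 1 to phase 2 doubles the per-lane symbol rate from 50 GBd to 100 GBd, which is where the additional signal-integrity difficulty comes from.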

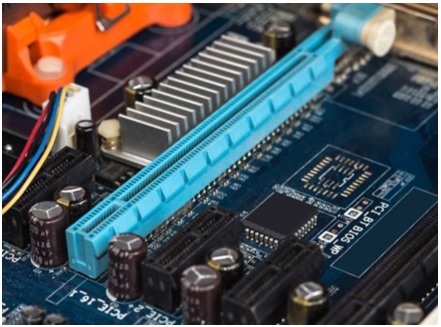

PCIExpress moves from Gen4 to Gen5 and PAM-4

To this point we have discussed the Ethernet standards, but they are not the only place where technologies will have to get faster. PCIExpress has been one of the fundamental building blocks for high-speed digital technologies. PCIExpress has been stuck at Gen4 for nearly a decade; however, Gen4 is not fast enough to handle 400 GbE. PCIExpress needed to adapt and get faster. As a result, the PCI-SIG consortium announced Gen5, which doubles the speed to meet the needs of 400 GbE. The move to Gen5 means that speeds increase from 16 Gbit/s to 32 Gbit/s per lane and that margins shrink further.
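A rough calculation shows why Gen4 falls short of a 400 GbE port while Gen5 does not. The sketch below assumes a x16 link and accounts only for the 128b/130b line coding used at these speeds. Packet and protocol overhead are ignored, so real throughput is lower. The function and constant names are illustrative.

```python
ETHERNET_400G_GBPS = 400  # nominal rate of one 400 GbE port


def pcie_throughput_gbps(rate_gt_per_s, lanes=16):
    """One-direction throughput in Gbit/s after 128b/130b line coding.

    Assumes a x16 link by default; protocol overhead is not modeled.
    """
    return rate_gt_per_s * lanes * 128 / 130


for name, rate in (("Gen4", 16), ("Gen5", 32)):
    gbps = pcie_throughput_gbps(rate)
    verdict = "enough" if gbps >= ETHERNET_400G_GBPS else "not enough"
    print(f"{name} x16: {gbps:.1f} Gbit/s, {verdict} for one 400 GbE port")
```

With these assumptions, a Gen4 x16 link delivers roughly 252 Gbit/s and a Gen5 x16 link roughly 504 Gbit/s, so only Gen5 clears the 400 Gbit/s mark.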

Figure 2: PCIExpress will move 4 times faster over the next five years.

The most profound challenge for PCIExpress Gen5 will be distinguishing between a 1 and a 0 at the end of the channel. To characterize this behavior, developers use the real-time eye measurement. For Gen4, the specified eye opening was 20 mV. While this is small, it was still possible to measure the real-time eye. With PCIExpress Gen5, the specification shrank to 14 mV. That is incredibly small, and it applies after de-embedding the impact of the channel and equalizing the eye. Simply put, the move to Gen5 will not be easy. Even more difficult will be the move to PCIExpress Gen6. It has been announced that, to keep up with 800 GbE, PCIExpress will move to PAM-4. This is a major change that will require new expertise and new test equipment across the entire ecosystem.
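To see why a move to PAM-4 squeezes margins further, consider an idealized model in which the signal levels are evenly spaced across a fixed peak-to-peak swing. The 800 mV swing below is a hypothetical value chosen for illustration, not a number from any PCIExpress specification, and noise, jitter, and channel loss are ignored.

```python
import math

SWING_MV = 800  # hypothetical peak-to-peak swing, for illustration only


def eye_height_mv(swing_mv, levels):
    """Ideal eye height when `levels` amplitudes evenly share the swing."""
    return swing_mv / (levels - 1)


def penalty_db(levels):
    """Eye-amplitude loss relative to two-level (NRZ) signaling, in dB."""
    return 20 * math.log10(levels - 1)


for name, levels in (("NRZ", 2), ("PAM-4", 4)):
    height = eye_height_mv(SWING_MV, levels)
    print(f"{name}: ideal eye height {height:.1f} mV, penalty {penalty_db(levels):.2f} dB")
```

In this idealized picture, each of the three PAM-4 eyes is one third the height of the NRZ eye, a penalty of about 9.5 dB before any channel impairments are considered.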

New technologies emerge to improve throughput

Because PCIExpress took many years to reach Gen5 and speeds above 20 Gbit/s, the industry needed faster alternatives. As mentioned in the introduction, software defined networks and network function virtualization are taking over the data center, and this creates demand for machine learning. Machine learning requires very fast communication between the processor and all of the accelerators, including the graphics processing unit (GPU), and it has also brought large demand for GPUs across the data center. The need for faster speeds created a need for new technologies that could handle machine learning better than PCIExpress. Enter Cache Coherent Interconnect for Accelerators (CCIX) and Compute Express Link (CXL).

So why the need for new technologies? The main driver behind them, beyond the need for faster speed, is the need for cache coherency, which essentially allows easier access to the caches of the processors and the accelerators. Cache coherency is a key component for enabling faster machine learning, as the components of the server are in constant communication. Both CXL and CCIX promise cache coherency along with speeds that will meet the demands of the 400 GbE infrastructure. They plan to coexist with PCIExpress.

Conclusion

Technologies inside the data center are becoming faster and more efficient. The move to 400 GbE is revolutionary and means that even high-speed digital technologies are impacted. Developers must keep up with the changes to satisfy consumers’ constant demand for more data with less latency and at faster speeds.