We all strive to “get it right the first time” in our designs, and we focus on the skills, techniques and tools that help us achieve this. But we should also pay attention to the skills, techniques and tools needed to “get it right the second time.” This is a phrase Dave Graef, CTO and General Manager of Teledyne LeCroy, likes to use.

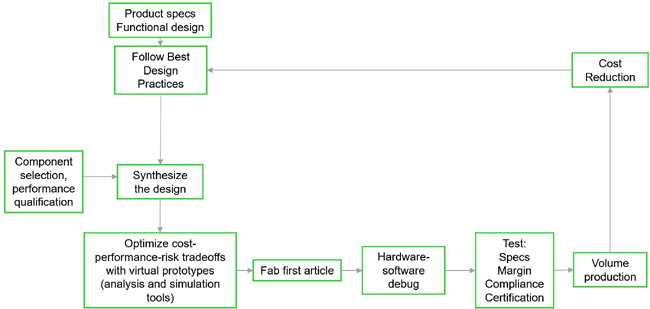

Getting it right the first time is about a robust design process that identifies the potential problems and designs them out right at the beginning. Analysis is performed based on component and design tradeoffs to balance performance, cost, schedule and risk factors. A final system level analysis is usually performed to make sure there are no surprises. Figure 1 illustrates a stripped down process flow to get it right the first time.

Figure 1. Process flow for getting it right the first time.

The starting place to get it right the first time is a set of robust best design practices. These are created specifically to avoid the common, known problems in high-speed products, such as reflection noise, cross talk, ground bounce, attenuation, mode conversion and power rail noise.

These best practices are the basis of synthesizing the initial design. But every product is custom. This means there will always be some tradeoffs to make based on the performance requirements and meeting the cost, schedule and risk constraints. This is where analysis tools like circuit and field solver simulators come in.

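What is the optimum line-to-line spacing? When the lines are far apart, the cross talk is low, but so is the interconnect density. This may require more layers or a larger board, and both add cost. When the lines are close together, the density is high, but the cross talk increases.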

For the maximum line length and data rate, is the attenuation low enough to leave the resulting eye sufficiently open with low-cost FR4, or will a more power-hungry equalization method be required? Can it even be fabricated with FR4, or is a more expensive laminate needed?
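A first-pass answer to the attenuation question can come from a simple loss budget before any detailed simulation. The sketch below is only that: it assumes the loss scales linearly with both length and frequency, evaluates it at the Nyquist frequency (half the data rate for NRZ signaling), and compares it with the loss the receiver can tolerate. The function names and every number in the example are illustrative assumptions, not values from a datasheet or from a measurement.

```python
def channel_loss_db(length_in, data_rate_gbps, loss_db_per_in_per_ghz):
    """Estimate channel attenuation at the Nyquist frequency.

    Assumes loss scales linearly with length and frequency, which is
    only a rough first-order model of a real interconnect.
    """
    nyquist_ghz = data_rate_gbps / 2.0  # NRZ signaling assumed
    return loss_db_per_in_per_ghz * length_in * nyquist_ghz


def laminate_is_sufficient(length_in, data_rate_gbps,
                           loss_db_per_in_per_ghz, max_loss_db):
    """Return True if the estimated loss fits inside the loss budget."""
    loss = channel_loss_db(length_in, data_rate_gbps, loss_db_per_in_per_ghz)
    return loss <= max_loss_db


if __name__ == "__main__":
    # All four values below are hypothetical placeholders.
    length_in = 20.0               # longest route on the board, inches
    data_rate_gbps = 10.0          # NRZ data rate
    loss_db_per_in_per_ghz = 0.2   # assumed laminate loss figure
    max_loss_db = 15.0             # assumed loss the receiver can recover

    loss = channel_loss_db(length_in, data_rate_gbps, loss_db_per_in_per_ghz)
    ok = laminate_is_sufficient(length_in, data_rate_gbps,
                                loss_db_per_in_per_ghz, max_loss_db)
    print(f"Estimated loss: {loss:.1f} dB, within budget: {ok}")
```

If the estimate exceeds the budget, that is the cue to look more closely, with a field solver and a circuit simulator, at a lower loss laminate, a shorter route or more equalization.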

With accurate starting information about the components and materials, and a robust modeling and simulation process, there should be a high probability that the product will work as predicted in the first article.

It should pass the initial bring-up functional tests and the certification tests, and then be released to manufacture. Over the lifetime of the product, the design and component selection can be cost reduced.

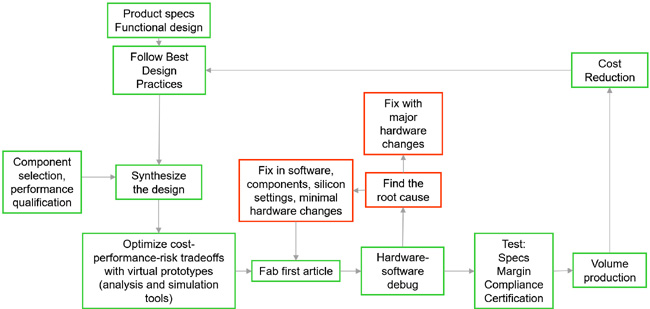

But what if it doesn’t work the first time when you do the initial tests of the first article? We need a process to “get it right the second time.”

This is where measurements play an essential role. The basic process to get it right the second time is to identify the problem, find the root cause and fix the problem either in software or hardware at the root cause.

Sometimes it’s a simple fix and the first article can be slightly modified to implement the fixes. Sometimes it requires more major changes. Figure 2 illustrates the beginning of the “Get it right the second time” process flow.

Figure 2. The initial steps to "get it right the second time."

The key starting place is finding the root cause. This process is no different from how a doctor diagnoses an illness or how a detective finds the killer. I like to refer to it as “forensic analysis.” By no coincidence, this is also the topic of one of my presentations at EDI Con 2016 in the Frequency Matters Theater. You can download a copy of the extended notes from my talk by clicking here.

Forensic analysis is about using all the information available to you to search for clues that might suggest suspects, and then interrogating each suspect by asking consistency questions. This is really about using measurements. The scope is the workhorse instrument to sniff out problems and search for clues.

One step in the search for the root cause is to follow Captain Renault’s advice from the 1942 classic movie Casablanca: “Round up the usual suspects.” At the top of the list are the common questions. Is it plugged in? Is the voltage acceptable on all the power rails? Is the power rail noise acceptable? Is the reflection noise acceptable? Is there excessive cross talk, especially when signals switch? Is the jitter from PLLs or in the data pattern within spec?
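One way to keep such a list honest is to write it down as data and check each scope measurement against its limit. The sketch below is a minimal illustration of that idea: the `Suspect` class, the measurement names and all of the limits are hypothetical, and a suspect with no measurement stays on the list until it has been interrogated.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Suspect:
    name: str    # human-readable description of the potential problem
    key: str     # name of the scope measurement that interrogates it
    low: float   # lowest acceptable measured value
    high: float  # highest acceptable measured value


def still_suspect(suspect: Suspect, measurements: Dict[str, float]) -> bool:
    """A suspect is cleared only by a measurement inside its limits."""
    value = measurements.get(suspect.key)
    return value is None or not (suspect.low <= value <= suspect.high)


def round_up(suspects: List[Suspect],
             measurements: Dict[str, float]) -> List[str]:
    """Return the names of the suspects that have not been cleared."""
    return [s.name for s in suspects if still_suspect(s, measurements)]


# Hypothetical list and limits; every designer will have their own.
USUAL_SUSPECTS = [
    Suspect("3.3 V rail voltage", "rail_3v3_v", 3.135, 3.465),
    Suspect("3.3 V rail noise", "rail_3v3_noise_mvpp", 0.0, 50.0),
    Suspect("cross talk on victim line", "xtalk_mvpp", 0.0, 30.0),
    Suspect("PLL jitter", "jitter_ps_rms", 0.0, 5.0),
]

if __name__ == "__main__":
    # Made-up readings for illustration; jitter has not been measured yet.
    readings = {
        "rail_3v3_v": 3.30,
        "rail_3v3_noise_mvpp": 82.0,
        "xtalk_mvpp": 12.0,
    }
    for name in round_up(USUAL_SUSPECTS, readings):
        print("Still a suspect:", name)
```

With these made-up readings, the rail noise remains a suspect because it is out of limits, and the PLL jitter remains a suspect because it has not been measured.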

Every hardware designer has their own list of the usual suspects and we use scope measurements to interrogate each one to see if it is potentially the guilty party.

There are other techniques we commonly use in forensic analysis and you can see some of them in my presentation handout.

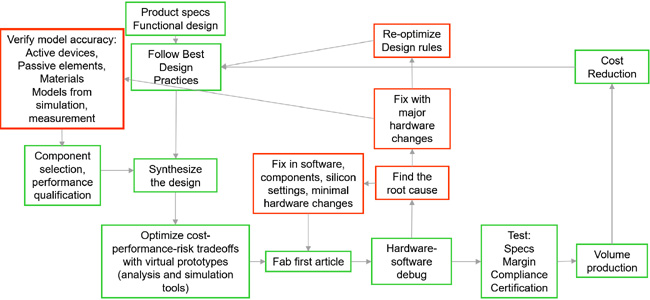

It’s just as important to have confidence in who the killer is as it is to fix the problem. If you make some changes and the problem goes away, but you don’t know which change fixed it or why, you will never be able to confidently use the information to help you in the next design. This feedback is vital to optimize your “get it right the first time” process flow. Figure 3 shows how we can use the accurate information about the root cause to adjust the best design process and the quality of the models used as input to predict performance.

Figure 3. The complete integrated process flow to “get it right the first time” and to “get it right the second time.”

When the pressure is on to fix the product and ship it, it’s sometimes difficult to keep in mind that if we give equal attention to the “get it right the second time” process, we improve the odds that we “get it right the first time” in the next product.